Context Tracker AI

Because Great Ideas Shouldn't Get Lost in the Flow!

Ever found yourself wondering "How did I spend 4 hours fixing that tiny bug?" or "Why didn't I document the setup process?" You're not alone. As developers, we often start with good intentions to document our work, only to get lost in the maze of problem-solving and leave our future selves with few breadcrumbs to follow.

Context Tracker is my solution to this universal developer struggle: a tool that automatically captures and preserves your train of thought while you work, study something new, or explore and research a new topic. You should be able to track this journey seamlessly, with minimal effort outside the task at hand.

TL;DR: Context Tracker is an automated documentation tool that captures your learning journey, research paths, and development processes in real time. Perfect for developers, researchers, and anyone who wants to preserve their train of thought without disrupting their flow. Demo Video

How did it come to this? AI Solutionism

Let's use AI to solve this problem (yes, I know it's a cliché now). Quick backstory: I was messing around with Claude's new "computer-use" API through open-interpreter and was fascinated, but not very impressed. It was very difficult to prompt it into doing exactly what you wanted. While grappling with this, I asked myself: "Could a human do it?" The answer was "maybe". My instructions definitely lacked specificity, and without contextual knowledge, any person would have come back to me with questions. The things I was trying to automate were exactly the ones I had never documented!

So I figured it would be more useful to generate documentation first, along with a "prompt" instruction list that could be followed to do a certain task, whether by a human, an AI, or maybe even my own future self. The wonderful aspect of this idea is that I want to extract a lot more than instruction lists and task sequences from my own activity. I could "maybe" start catching my "AHA!" moments, long trains of thought, and the contextual mind-space of when I switch between tasks. But most of all, I could start writing more! I would have all the raw material I need and no longer be intimidated by the fear of an empty page!

From Scattered Notes to Structured Thoughts

I used to dump my thoughts into README.md files, behemoth multi-line comments, local scratch files that I .gitignore'd (and sometimes committed), random notepad apps, and occasionally Google Docs. Much clutter. Around the same time, I started harbouring the idea of building in public: working incrementally and talking about whatever I tinker with. All of this got me wanting to post more on X (and, recently, Substack Notes!). Imagine being able to trace the evolution of ideas, seeing how a random tweet, a brief mention in a YouTube video, or a GitHub issue led to new discoveries! Context Tracker aims to evolve into this vision: a true "curiosity journal" that captures not just what we do, but how our thoughts and directions evolve over time.

What is Context Tracker? What can it do for me right now?

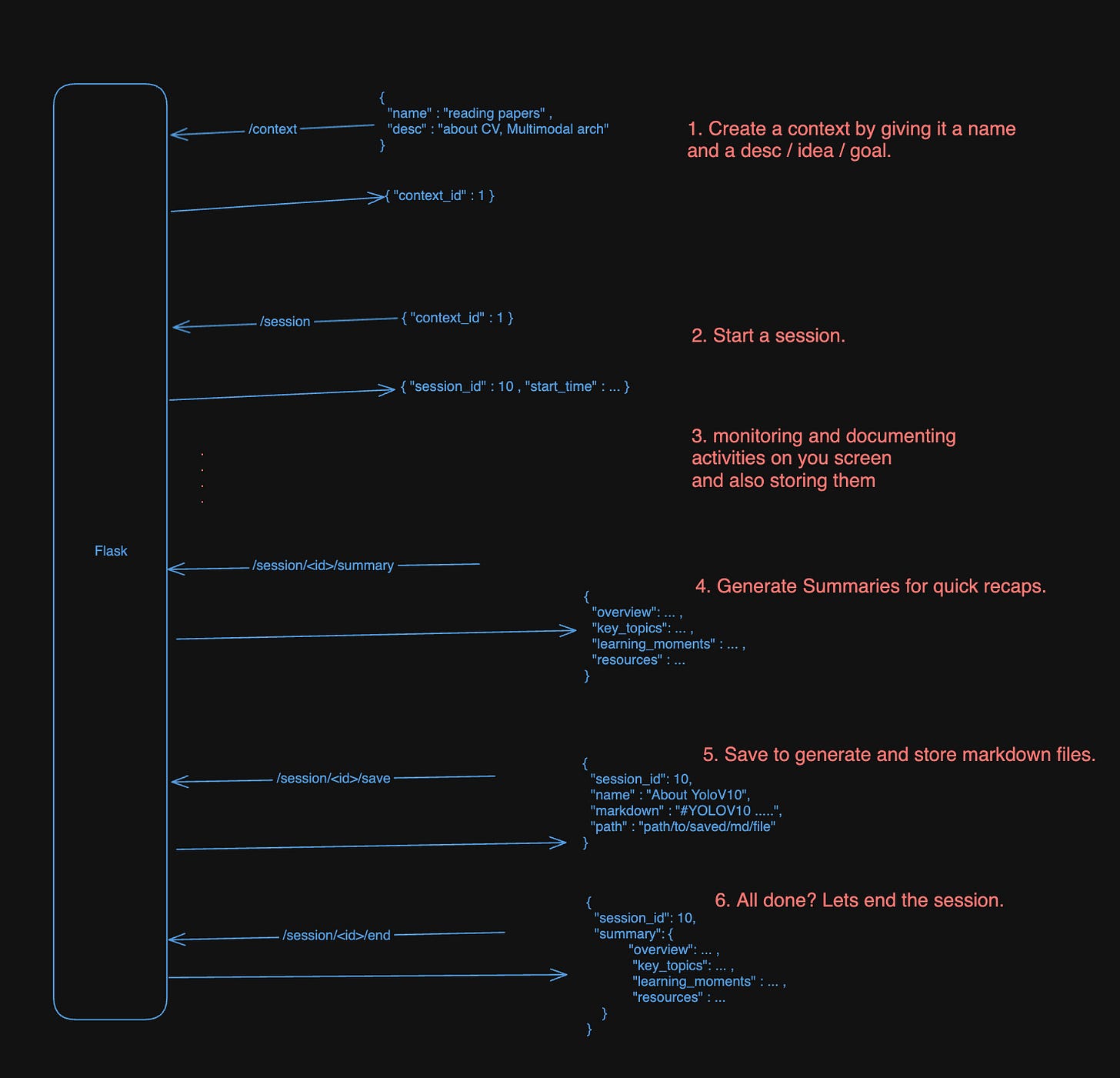

At the time of writing, it's a simple Flask server plus a React app that acts as a Raycast plugin. Technically, though, any frontend could use the Flask server's APIs for the same purpose.

Key Ideas

Here's what I mean by a few commonly repeated words:

Context: A single, unique bucket (a train of thought, a course of action) that you set your mind to; the overarching theme of your work.

Session: One instance of taking a crack at the context. Maybe you come back to "Study Math" every night after dinner, or revisit "Side Project XV" every Saturday. Every window of time you spend sitting down with a singular focus on a context is a session.

Events: A quantum, a literal snapshot; the smallest temporal unit of screen (and hopefully mind) space that gets recorded.
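To make the hierarchy concrete, here is one way the three terms could nest as Python dataclasses: a context owns many sessions, and a session owns many events. This is a sketch under my own assumptions, not the project's actual schema; field names such as `snapshot_path` and `description` are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class Event:
    """Smallest recorded unit: one snapshot of screen (and hopefully mind) space."""
    timestamp: datetime
    snapshot_path: str     # hypothetical: where the screenshot is stored
    description: str = ""  # hypothetical: e.g. a model-generated caption


@dataclass
class Session:
    """One sitting with a singular focus on a context."""
    started_at: datetime
    ended_at: Optional[datetime] = None
    events: List[Event] = field(default_factory=list)

    def record(self, event: Event) -> None:
        # An ended session is closed; new snapshots belong to a new session.
        if self.ended_at is not None:
            raise ValueError("cannot record events in an ended session")
        self.events.append(event)

    def end(self, at: datetime) -> None:
        self.ended_at = at


@dataclass
class Context:
    """The overarching theme, e.g. "Study Math" or "Side Project XV"."""
    name: str
    sessions: List[Session] = field(default_factory=list)

    def start_session(self, at: datetime) -> Session:
        session = Session(started_at=at)
        self.sessions.append(session)
        return session

    def timeline(self) -> List[Event]:
        """All events across every session, oldest first."""
        return sorted(
            (e for s in self.sessions for e in s.events),
            key=lambda e: e.timestamp,
        )


# Usage: two evenings of "Study Math", one snapshot each.
study_math = Context("Study Math")
monday = study_math.start_session(datetime(2024, 10, 7, 20, 0, tzinfo=timezone.utc))
monday.record(Event(datetime(2024, 10, 7, 20, 0, 15, tzinfo=timezone.utc), "shots/0001.png"))
monday.end(datetime(2024, 10, 7, 21, 0, tzinfo=timezone.utc))
tuesday = study_math.start_session(datetime(2024, 10, 8, 20, 0, tzinfo=timezone.utc))
tuesday.record(Event(datetime(2024, 10, 8, 20, 0, 15, tzinfo=timezone.utc), "shots/0002.png"))
```

Keeping events inside sessions makes it easy to summarize a single sitting, while `timeline()` flattens everything for a context-wide view.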

Under the Hood

The basic workflow for the app is as follows:

Watch it in action in this demo video!
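As a rough mental model of the capture side, here is a minimal sketch of a timer-driven loop. It assumes events are snapshots taken on a fixed interval (15 seconds, the frequency mentioned in the roadmap below). `capture_loop`, `take_snapshot`, and `store_event` are hypothetical names, not the project's actual code; the real screenshot and storage calls are passed in as functions so the loop can be exercised without either.

```python
import time
from datetime import datetime, timezone
from typing import Callable, Optional


def capture_loop(
    take_snapshot: Callable[[], str],
    store_event: Callable[[datetime, str], None],
    interval_s: float = 15.0,
    max_events: Optional[int] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Snapshot the screen every `interval_s` seconds and store each one as an event.

    Runs until `max_events` is reached (forever if None) or until Ctrl+C
    ends the session. Returns the number of events stored.
    """
    count = 0
    try:
        while max_events is None or count < max_events:
            ts = datetime.now(timezone.utc)
            store_event(ts, take_snapshot())
            count += 1
            if max_events is not None and count >= max_events:
                break  # don't wait after the final capture
            sleep(interval_s)
    except KeyboardInterrupt:
        pass  # ending the session stops capture cleanly
    return count


# Usage with fake dependencies (no real screenshots, no real waiting):
stored = []
waits = []
frames = iter(["shot_0.png", "shot_1.png", "shot_2.png", "shot_3.png"])
n = capture_loop(
    take_snapshot=lambda: next(frames),
    store_event=lambda ts, path: stored.append(path),
    max_events=3,
    sleep=waits.append,
)
```

Injecting `sleep` and the capture/storage functions keeps the loop itself trivial to test, and swapping the screenshot source later (say, for a local model pipeline) doesn't touch the timing logic.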

Future Roadmap

This is still a quick-and-dirty hack, but it already does a lot for me! Having the following would absolutely blow it away, and hopefully they're things I (or you too! it's OSS) can add:

Support custom instructions for summary/md generation

This is already supported by the API, but I wasn't able to add it to the Raycast plugin. It would be awesome to get this in and let users direct summary generation through their own lens.

User-triggered events

Sometimes the 15s snapshot interval misses the full picture. Sometimes I want to write down my thoughts, pick an exact screen with highlighted text, or assert some information. This isn't currently possible, but it should be fairly easy to add by exposing `save_event()` from `storage.py` as an API, plus a nice UI to record the user's inputs.

Add support for local models

This is at the top of my list: really cheap vision LLM inference would mean I could run this all day, especially with the new Llama 3.2 Vision and LLaVA models!!!
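Going back to user-triggered events: a handler behind such an API might validate the note and hand it to `save_event()`. The sketch below assumes events are stored as plain dictionaries, which is my assumption since the real shape isn't shown here. `handle_user_event`, the payload fields (`note`, `context`, `highlight`), the route path, and the in-memory `save_event` stand-in are all hypothetical.

```python
from datetime import datetime, timezone
from typing import Any, Dict, List, Tuple

# Hypothetical in-memory stand-in for save_event() in storage.py;
# the real function's signature isn't shown in this post.
EVENTS: List[Dict[str, Any]] = []


def save_event(event: Dict[str, Any]) -> None:
    EVENTS.append(event)


def handle_user_event(payload: Any) -> Tuple[Dict[str, Any], int]:
    """Validate a user-submitted note and store it as an event.

    Returns (response_body, http_status) so any web framework can wrap it.
    """
    if not isinstance(payload, dict):
        return {"error": "expected a JSON object"}, 400
    note = payload.get("note")
    if not isinstance(note, str) or not note.strip():
        return {"error": "'note' must be a non-empty string"}, 400
    event = {
        "source": "user",  # distinguishes manual events from timer-captured ones
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "note": note.strip(),
        "context": payload.get("context"),      # optional: which context it belongs to
        "highlight": payload.get("highlight"),  # optional: text highlighted on screen
    }
    save_event(event)
    return {"ok": True, "event": event}, 201


# Wiring it into the Flask server might look roughly like this
# (the route path is made up):
#
#   from flask import Flask, jsonify, request
#   app = Flask(__name__)
#
#   @app.post("/events/user")
#   def user_event():
#       body, status = handle_user_event(request.get_json(silent=True))
#       return jsonify(body), status
```

Keeping validation in a plain function, separate from the route, means it can be tested without running the server, and the Raycast plugin (or any other frontend) only needs to POST a small JSON body.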

And there's a ton of other side quests within this project that I may never find the time for! I even imagined having OpenAI's o1 (since it claims it can reason) selectively intervene with a voice whenever it couldn't understand why I was doing something, based on the previous sequence of events, so I could verbally justify (and record) the thought!

This sure is fun, and it looks like I'll build out more in the near future. Keep an eye on the GitHub repo for the latest stuff.

Links:

Inception of the idea with this X post

GitHub: Context-Tracker (Backend)

GitHub: Raycast Plugin Context-Tracker